Responsible AI in Practice: Accountability, Reliability, Inclusivity and Fairness

Artificial Intelligence (AI) operates in the real world: with real employees, real customers, real decisions, and real impacts. As companies transition from the realm of AI theory to AI practice, these impacts can make or break an investment. Fortunately, Responsible AI helps organizations navigate these murky waters.

The business case for Responsible AI (RAI) is clear: without it, AI's value to an organization diminishes while its risk increases. Yet when companies discuss Responsible AI, the conversation often stays abstract. They end up with a disconnected set of AI governance policies that fall short of guiding the people in the company who are making difficult choices about real-world projects.

In this two-part blog series, our goal is to share emerging methods and strategies for operationalizing and assessing responsibility in AI implementations and their use. In this first post, we discuss four of the eight principles of Responsible AI: accountability, reliability, inclusiveness, and fairness. The other four (transparency, security and privacy, sustainability, and governance) will be covered in a follow-up post. Both posts aim to make Responsible AI practical, moving it from theoretical concepts into everyday decision-making in the digital business environment.

Before we move forward, it's crucial to understand that although the principles of Responsible AI remain relatively constant, the specific goals, technologies, and strategies for supporting and managing these capabilities are changing quickly. Furthermore, every organization is unique: what works for monitoring accountability or assessing inclusiveness in one organization might not be effective in another.

Accountability in Responsible AI: Operationalizing ownership

Accountability in Responsible AI fosters a culture, and sets up the mechanisms, needed to support human oversight of autonomous systems. To do that, it requires holding individuals, teams, and the technology itself accountable to Responsible AI principles before, during, and after an AI project is implemented. If an AI system does not perform as expected, it should be taken offline until it can meet the agreed standards.

This means that everyone involved with the AI system, from executives and directors down to managers, data scientists, and engineers, must understand their specific responsibilities and fulfill them. By embedding accountability throughout the AI project lifecycle and surfacing concerns across the organization, we build the broader Responsible AI muscle that every organization needs.

Assessing for accountability in AI

So, what does accountability look like in practice? One straightforward strategy is to create an accountability matrix that clearly defines who is responsible for each aspect of AI-related activities: data scientists ensure the data is sufficiently diverse and of high quality; ML Operations staff ensure alignment with business processes; executives ensure the best use cases are selected to support broader organizational goals; and, optionally, product teams ensure the project stays aligned with business targets. A project-level visualization, like Unilever's, can be highly effective here.

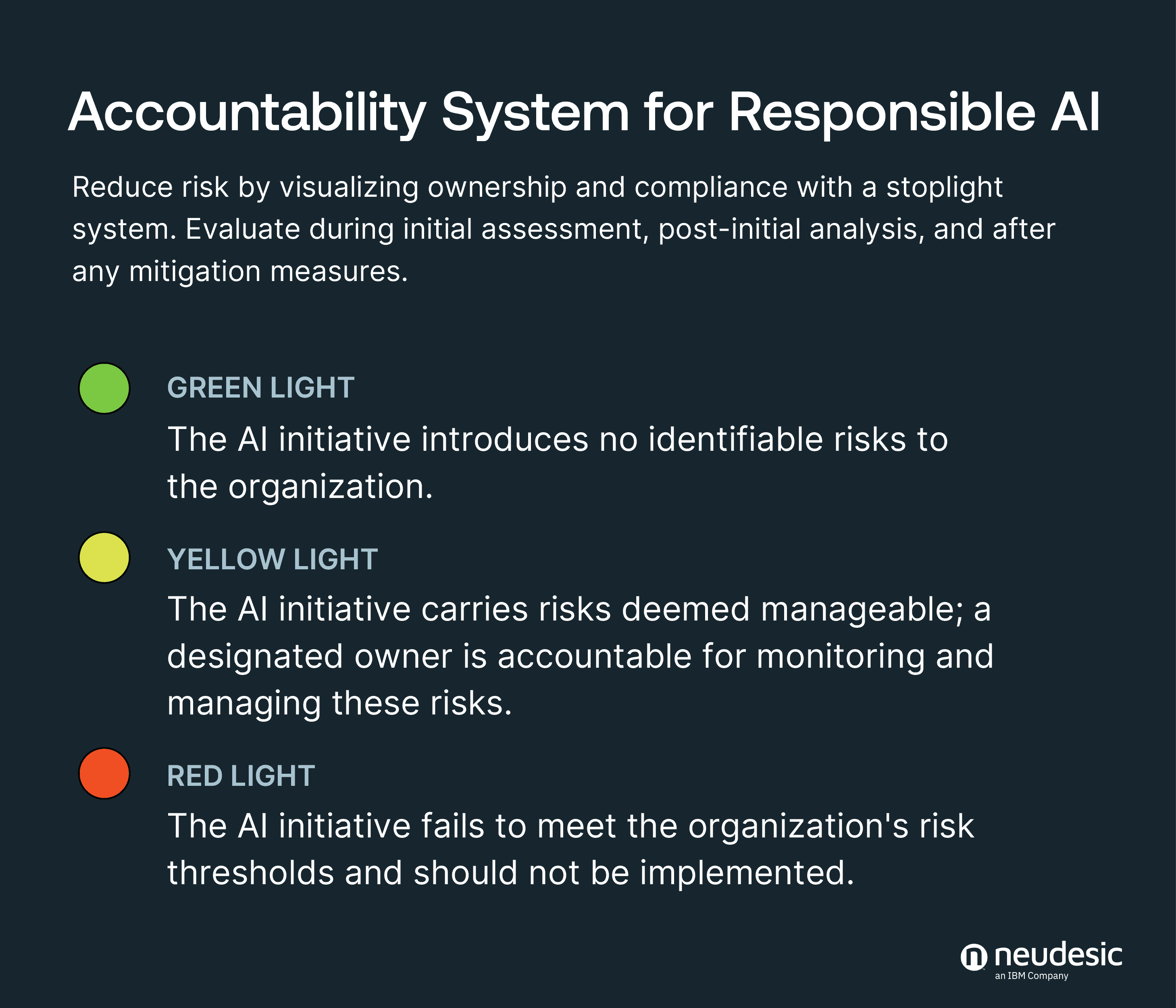

To drive quick alignment and resolution, Unilever's system uses red, yellow, and green lights to show a project's underlying Responsible AI requirements at a glance and communicate whether critical steps have been completed.

- Red means the system should not be deployed because it does not meet Unilever's standards.

- Yellow means the system carries acceptable risks, but the owners of those risks and their mitigation strategies must be tracked and managed on an ongoing basis.

- Green means the AI system poses little to no risk, or that earlier risks have been successfully mitigated.
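To make the traffic-light idea concrete, here is a minimal sketch of how an accountability matrix might roll up into a single status. The `Requirement` fields, the `project_status` function, and the roll-up rules are hypothetical illustrations, not Unilever's actual tool or criteria.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    RED = "red"
    YELLOW = "yellow"
    GREEN = "green"


@dataclass
class Requirement:
    """One row of a hypothetical accountability matrix."""
    name: str
    owner: str             # person or role accountable for this item
    critical: bool         # an unmet critical item blocks deployment
    met: bool              # requirement satisfied or risk already mitigated
    mitigation: str = ""   # tracked plan for an accepted, still-open risk


def project_status(requirements: list[Requirement]) -> Status:
    """Roll matrix rows up into a single light (assumed rules, not Unilever's).

    RED:    an unmet item is critical, or lacks an owner or a mitigation plan.
    YELLOW: open risks remain, but each is owned and has a mitigation plan.
    GREEN:  every requirement is met.
    """
    open_items = [r for r in requirements if not r.met]
    if any(r.critical or not r.owner or not r.mitigation for r in open_items):
        return Status.RED
    if open_items:
        return Status.YELLOW
    return Status.GREEN


matrix = [
    Requirement("Training data reviewed for diversity and quality",
                owner="Data science lead", critical=True, met=True),
    Requirement("Workflow aligned with business process",
                owner="MLOps lead", critical=False, met=False,
                mitigation="Monthly review with the process owner"),
]
print(project_status(matrix).value)  # yellow
```

Because every row names an owner, a yellow or red light points directly at the person accountable for closing the gap.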

Reliability in Responsible AI: Supporting system effectiveness

Truly reliable AI systems are designed to run effectively under a variety of contexts and conditions, even those beyond their initial scope. However, any team that has experimented with artificial intelligence knows that reliability cannot be taken for granted. It becomes a problem when automated systems make inaccurate predictions or ill-informed suggestions, and a disaster when companies or their users act on them. It's the organization's responsibility to decide when its autonomous tools need to be reined in and when they can be set free.

Two main threats to reliability, adversarial attacks (or misuse) and model drift, show why reliability matters to an organization's brand and bottom line.

To ensure reliable AI, it's crucial to understand how your system could be abused. Microsoft learned this lesson in 2016 when it released its AI-enabled chatbot, Tay. Within 24 hours of release, internet trolls launched a coordinated data-poisoning attack that led Tay to tweet wildly inappropriate content. Microsoft had prepared for many types of threats to the system, but it acknowledged this critical oversight and took responsibility for it.

Not all threats to AI reliability are adversarial. Models can drift into unreliability when training data becomes outdated or when model assumptions no longer match real-world conditions. Drift erodes the reliability of AI systems, producing decisions or predictions that grow progressively disconnected from reality, decreasing user trust and increasing operational risk. Whether a system's accuracy degrades gradually over time or suddenly under attack, you need processes to detect and correct the problem quickly.
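One simple way to watch for drift is to compare each input feature's production distribution with its training distribution. The sketch below uses the population stability index (PSI), a common drift statistic that this post does not itself prescribe; the bin count, the 0.2 alert threshold, and the synthetic "income" feature are assumptions for illustration.

```python
import numpy as np


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """PSI between a baseline (training) sample and a current (production) sample.

    Bins are cut at the baseline's quantiles; eps keeps empty bins from
    producing log(0) or division by zero.
    """
    baseline = np.asarray(baseline, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior cut points at the baseline's quantiles (deciles for bins=10)
    edges = np.quantile(baseline, np.linspace(0, 1, bins + 1)[1:-1])
    # Assign each value to a bin index in 0..bins-1, then take proportions
    expected = np.bincount(np.searchsorted(edges, baseline, side="right"),
                           minlength=bins) / baseline.size
    actual = np.bincount(np.searchsorted(edges, current, side="right"),
                         minlength=bins) / current.size
    expected = np.clip(expected, eps, None)
    actual = np.clip(actual, eps, None)
    return float(np.sum((actual - expected) * np.log(actual / expected)))


# Synthetic example: a hypothetical "income" feature, in thousands
rng = np.random.default_rng(0)
train_income = rng.normal(50, 10, 5_000)
stable = rng.normal(50, 10, 5_000)    # same distribution as training
shifted = rng.normal(58, 12, 5_000)   # the population has moved

DRIFT_THRESHOLD = 0.2  # assumed alert level; tune per feature
for name, sample in [("stable", stable), ("shifted", shifted)]:
    psi = population_stability_index(train_income, sample)
    print(f"{name}: PSI={psi:.3f} -> {'ALERT' if psi > DRIFT_THRESHOLD else 'ok'}")
```

In practice a check like this would run on a schedule for each important input feature, alongside monitoring of the model's own outputs and, once labels arrive, its accuracy.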

Assessing for reliability in AI

Fortunately, there are tools to assess and improve the reliability of an AI project. Red teaming, setting up a group to proactively attack the system and flush out unintended use cases, can surface unintended consequences before a project is released. Because a good red team brings perspectives beyond those of the people who built the system, it's also a strong example of the power of the inclusiveness principle.

To defend against adversarial attacks, IBM's Adversarial Robustness Toolbox (ART) can assess the robustness of machine learning models, including neural networks that classify tabular data, images, text, audio, and video, and help mitigate some of the risks. One ART metric, CLEVER, estimates how vulnerable a neural network classifier is to adversarial perturbations. By prioritizing reliability, companies can maximize the time their automated systems spend doing their jobs.
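To make concrete what adversarial robustness testing probes for, here is a library-free sketch of the Fast Gradient Sign Method (FGSM), a classic evasion attack, against a toy logistic-regression model. ART ships ready-made implementations of attacks like this one (its `FastGradientMethod` class, for example); the model weights, data, and epsilon values below are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm(x, y, w, b, epsilon):
    """Fast Gradient Sign Method for a binary logistic-regression model.

    For cross-entropy loss, the gradient w.r.t. the input is (p - y) * w,
    so each feature is nudged by epsilon in the direction that raises the loss.
    """
    p = sigmoid(x @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]
    return x + epsilon * np.sign(grad_x)


def accuracy(x, y, w, b):
    return float(np.mean((sigmoid(x @ w + b) >= 0.5) == y))


# Toy "trained" model and data (hypothetical, for illustration only)
rng = np.random.default_rng(1)
w = np.array([2.0, -1.0, 0.5])
b = 0.0
x = rng.normal(size=(1_000, 3))
y = (x @ w + b >= 0).astype(float)  # labels the model gets 100% right

for eps in (0.0, 0.1, 0.3):
    x_adv = fgsm(x, y, w, b, eps)
    print(f"epsilon={eps}: accuracy={accuracy(x_adv, y, w, b):.2f}")
```

A model that scores perfectly on clean data can lose a large share of its accuracy under small, targeted perturbations; tracking that curve as epsilon grows is one simple way to quantify robustness before release.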

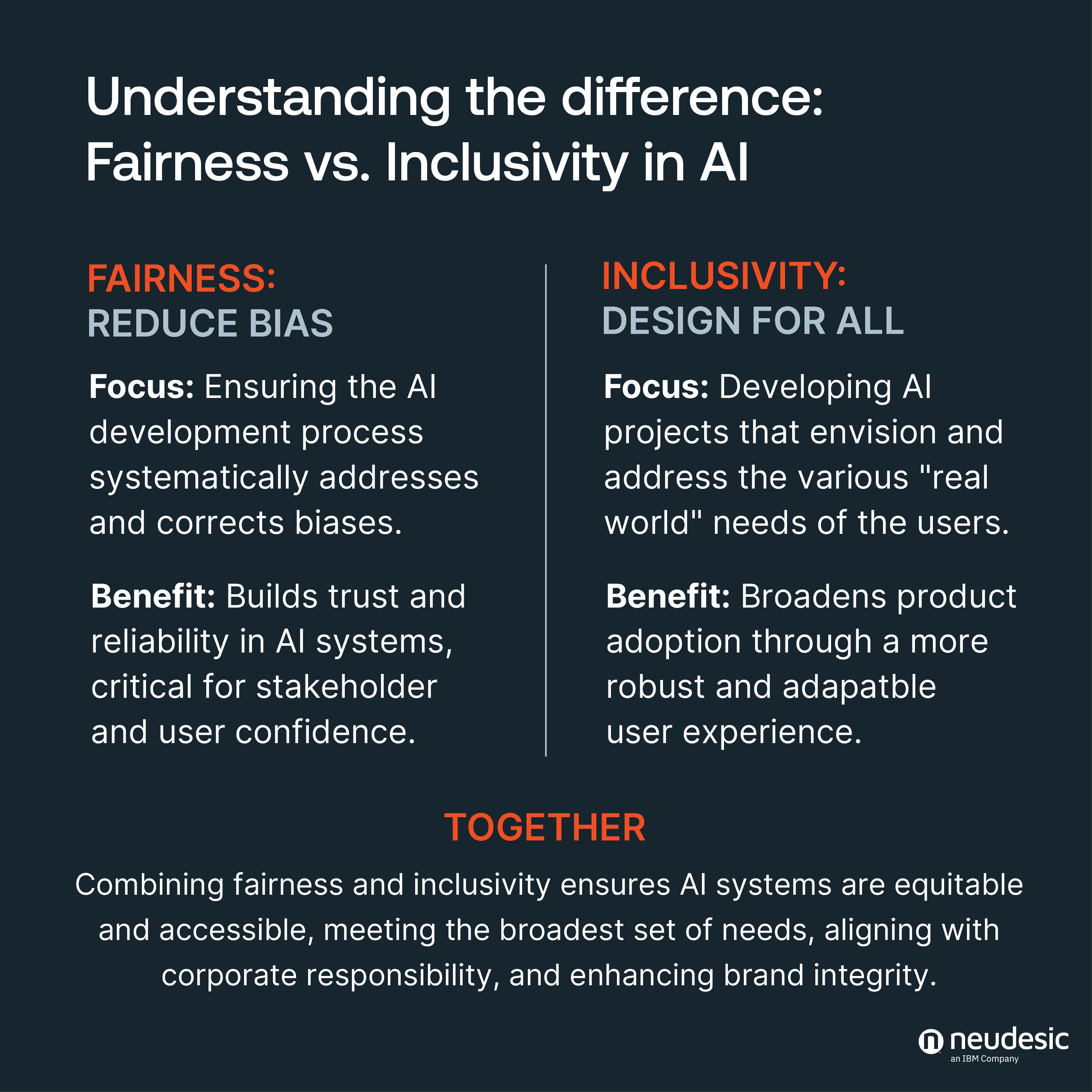

Inclusiveness in Responsible AI: Ensuring system viability and value

If the principle of inclusiveness in AI doesn't bring to mind images of enterprise growth, it might be time to look closer. Inclusive AI ensures autonomous systems produce the best outcomes for a broad range of users and stakeholders. To achieve this, implementations must draw on a variety of people and experiences to account for the different realities of the system's use and impact. That input should shape the use case, the system's design, and its deployment in the real world. By prioritizing inclusiveness, companies maximize value for a diverse user base and, consequently, for themselves.

To start, AI development teams should be diverse, representing a variety of opinions, racial backgrounds, experiences, skills, and more. Next, your training data must be appropriately diverse as well; this may mean using or buying synthetic or high-quality data sets. However, there is no replacement for understanding your users and their experiences. Gathering insights from actual users, including people who may not use the system but are affected by it, is crucial from the earliest stages of discovery and design and should continue after the product is deployed.

Assessing for AI inclusivity

To manage the inclusiveness of your AI system, it’s essential to measure it. While structured guidelines for testing accessibility, such as the Web Content Accessibility Guidelines, offer a starting point, testing your product with a representative sample of your user base provides a broader perspective on how your tool engages and possibly alienates users. Identifying these pain points early in a controlled environment can be critical to the success or failure of your project.
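Measuring inclusiveness usually means disaggregating results: instead of one overall success rate, compare outcomes across user segments from a representative pilot. A minimal sketch, assuming hypothetical segment names, pilot data, and a 5-percentage-point tolerance:

```python
from collections import defaultdict


def rate_by_group(records, group_key, outcome_key):
    """Share of records where outcome_key is truthy, broken out by group_key."""
    totals, hits = defaultdict(int), defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        hits[group] += int(bool(record[outcome_key]))
    return {g: hits[g] / totals[g] for g in totals}


def flag_gaps(failure_rates, max_gap):
    """Groups whose failure rate exceeds the best group's by more than max_gap."""
    best = min(failure_rates.values())
    return sorted(g for g, rate in failure_rates.items() if rate - best > max_gap)


# Hypothetical pilot results: did the user fail to complete the core task?
sessions = (
    [{"segment": "desktop", "failed": i < 5} for i in range(100)]
    + [{"segment": "mobile", "failed": i < 12} for i in range(100)]
    + [{"segment": "screen_reader", "failed": i < 10} for i in range(40)]
)
rates = rate_by_group(sessions, "segment", "failed")
print(rates)                           # {'desktop': 0.05, 'mobile': 0.12, 'screen_reader': 0.25}
print(flag_gaps(rates, max_gap=0.05))  # ['mobile', 'screen_reader']
```

An overall failure rate of about 11% would hide the fact that one in four screen-reader sessions failed; the per-segment view makes that pain point visible before launch.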

For example, when Google developed an automated system to detect diabetic retinopathy, the images used for training were of significantly higher quality than those captured in local clinics. As a result, nearly 20% of retinal scans were rejected in real-world conditions, often due to poor lighting. Patients whose scans were rejected had to return on another day, a particular burden for those with limited transportation options or who couldn't take time off work. Nurses also faced challenges, as they had to retake images or schedule unnecessary follow-up appointments even when no signs of disease were present.

To its credit, Google published its findings and lessons learned. Since then, the company has improved its tool's inclusiveness by conducting design workshops with nurses, camera operators, and retinal specialists at future deployment sites, and the system's performance has improved significantly. Inclusiveness is often viewed as an equity issue, but in the realm of AI, it's also a matter of performance.

Fairness in Responsible AI: Achieving equitable outcomes

Fairness isn't just a principle; it's a process. Practicing fairness means ensuring your AI system equitably distributes opportunities, resources, and information among users and stakeholders. While the goal is to develop AI systems that do no harm, intelligent technologies that create advantages for some groups may inevitably disadvantage others. A more realistic aim is to predict how AI systems will affect subsets of stakeholders and to avoid or mitigate outcomes that disproportionately harm vulnerable groups.

The risk of inequitable AI is particularly clear in hiring. Automated recruitment tools can perpetuate biases found in historical hiring data, and an algorithmic hiring system that discriminates based on race or gender causes more problems than it solves. Intelligent applications can only add value if they produce equitable hiring outcomes.
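A common first screen for inequitable hiring outcomes in the US is the "four-fifths rule" from the Uniform Guidelines on Employee Selection Procedures: a group whose selection rate falls below 80% of the highest group's rate is treated as a sign of possible adverse impact. It is a screening heuristic, not a legal determination. A minimal sketch, with hypothetical groups and outcomes:

```python
def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> {group: selection rate}."""
    counts, selected = {}, {}
    for group, was_selected in decisions:
        counts[group] = counts.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {g: selected[g] / counts[g] for g in counts}


def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {g: rate / top for g, rate in rates.items()}


# Hypothetical screening outcomes from an automated recruiting tool
decisions = ([("men", i < 60) for i in range(200)]
             + [("women", i < 40) for i in range(200)])
rates = selection_rates(decisions)                    # men 0.30, women 0.20
ratios = impact_ratios(rates)                         # women: 0.20 / 0.30 ≈ 0.67
flagged = [g for g, r in ratios.items() if r < 0.8]   # four-fifths heuristic
print(flagged)  # ['women']
```

A flag like this doesn't explain why the gap exists, but it tells the accountable owner where to look before the tool's recommendations reach candidates.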

Assessing for fairness in AI

We are seeing a proliferation of tools and strategies to combat bias in machine learning models. Libraries such as Fairlearn and AI Fairness 360 (AIF360) enable the design or modification of algorithms to incorporate fairness as a constraint during training. Other mechanisms identify which factors most influence an outcome. For instance, if an automated hiring model favors candidates based on gender, developers could add a regularization term to the model's loss function that penalizes discrepancies in recommendation rates between male and female candidates. By adjusting a model's learning process in this way, we can prioritize decisions based on desired criteria (like relevant qualifications) and minimize bias-based decisions.
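The regularization idea can be sketched in a few dozen lines: logistic regression trained by gradient descent, with an added penalty on the squared gap in mean predicted "recommend" probability between two groups (a demographic-parity penalty). This is an illustrative sketch, not the Fairlearn or AIF360 API, which use their own, different mechanisms; the synthetic data, the penalty weight `lam=5.0`, the learning rate, and the function names are all assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train(X, y, group, lam=0.0, lr=0.5, steps=2_000):
    """Gradient-descent logistic regression with a demographic-parity penalty.

    loss = mean cross-entropy + lam * gap**2, where gap is the difference in
    mean predicted probability between group 1 and group 0. lam=0 is plain
    logistic regression.
    """
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    g1, g0 = group == 1, group == 0
    for _ in range(steps):
        p = sigmoid(X @ w + b)
        # Gradient of the mean cross-entropy
        grad_w = X.T @ (p - y) / n
        grad_b = np.mean(p - y)
        # Gradient of lam * gap**2, using dp/dz = p * (1 - p)
        gap = p[g1].mean() - p[g0].mean()
        s = p * (1 - p)
        dgap_w = (X[g1] * s[g1][:, None]).mean(axis=0) - (X[g0] * s[g0][:, None]).mean(axis=0)
        dgap_b = s[g1].mean() - s[g0].mean()
        grad_w = grad_w + 2 * lam * gap * dgap_w
        grad_b = grad_b + 2 * lam * gap * dgap_b
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def parity_gap(X, w, b, group):
    """Absolute difference in mean predicted 'recommend' probability between groups."""
    p = sigmoid(X @ w + b)
    return abs(p[group == 1].mean() - p[group == 0].mean())


# Synthetic, hypothetical hiring data
rng = np.random.default_rng(0)
n = 4_000
group = rng.integers(0, 2, n)                   # stands in for two gender groups
skill = rng.normal(size=n)                      # job-relevant qualification
proxy = group + rng.normal(scale=0.5, size=n)   # feature that leaks group membership
# Biased historical labels: group 1 was favored regardless of skill
y = (skill + group + rng.normal(scale=0.5, size=n) > 0.5).astype(float)
X = np.column_stack([skill, proxy])

for lam in (0.0, 5.0):
    w, b = train(X, y, group, lam=lam)
    print(f"lam={lam}: parity gap={parity_gap(X, w, b, group):.3f}, weights={np.round(w, 2)}")
```

With the penalty on, the model leans less on the proxy feature and more on skill, narrowing the gap. Demographic parity is only one fairness criterion, and a penalty like this deliberately trades some fit to the historical labels, which here is the point, since those labels encode past bias.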

By implementing a systematic approach to ensuring AI fairness, organizations can avoid the downtime and embarrassment associated with the inequitable use of automated tools. Operationalizing fairness keeps your AI project on track and prevents your organization from becoming a cautionary tale.

Bridging business goals and AI capabilities with Responsible AI policies and controls

Operationalizing accountability, reliability, inclusiveness, and fairness into the DNA of your artificial intelligence projects isn't just wise; it's a bold declaration that your company can identify risks and systematically address them. The keyword here is "system." These principles sit directly between business goals on one end and current AI capabilities on the other, rooting the two sides together in a way that is entirely unique to each company. Without the root system of Responsible AI, companies will find themselves floundering in a tidal wave of AI evolution, struggling to stay afloat as they feel the competitive pressure to adopt AI but lack any coherent method for doing so. Some companies will adapt to this wave of digital innovation, while others will define it. Which will you be?

There are many sides to a successful Responsible AI implementation, but experience and expertise can speed up adoption without compromising AI's value. As Microsoft's US AI Partner of the Year and a subsidiary of IBM (a leading voice in Responsible AI), Neudesic has helped companies navigate Responsible AI through workshops, controls, and policies that we can now offer to you. Contact us to get started today.