How Enterprises Can Scale AI Successfully Using Databricks

Artificial intelligence (AI) is at the heart of digital transformation (DX), enabling organizations to streamline operations, work more efficiently, cut costs, and respond more swiftly to ever-changing market conditions.

But deploying AI just for the sake of AI isn’t likely to yield the outcomes most organizations want. For AI to make a meaningful impact, it must be scaled across the organization and embedded in all core business processes and workflows.

If it’s done correctly, that’s where the magic can happen, as organizations gain the real-time capability to harness, clean, and manage their data and glean actionable insights from it that drive smarter business decisions. Scaling AI offers organization-wide visibility, empowering stakeholders throughout the organization to conduct thorough analysis, forecast business outcomes, take full advantage of opportunities, limit risks and drive profitability.

In fact, according to McKinsey & Co. research, companies that scale AI across the organization have added as much as 20 percent to their earnings before interest and taxes.

The improvements in operational efficiency and the bottom line are driving leaders across industries to get serious about scaling AI. Seventy-eight percent of executives surveyed by MIT for the report CIO Vision 2025: Bridging the Gap Between BI and AI, and 96% of the leader group, say that scaling AI and machine learning to create business value is a priority over the next three years.

The leap from PoC to implementation is filled with challenges

As organizations begin to shift from piloting AI technologies to fully operationalizing them, they quickly learn that their legacy systems can’t scale, largely due to a lack of data governance at the enterprise level.

Organizations are dealing with a massive amount of data that is growing exponentially and is becoming increasingly difficult to manage, maintain, and clean. Furthermore, the data is nearly impossible to process in real time for vital decision-making. Here’s why:

- Stakeholders don’t have visibility into the data. Data is siloed across departments and teams and is housed both on-prem and in the cloud.

- Lack of data standardization. Data is collected from a wide range of sources, and the resulting formats are often incompatible with one another.

- Poor data integrity. It is challenging to maintain large sets of data, and it becomes outdated, inaccurate, or corrupted over time.

- Limited resources. Most organizations simply can’t afford to hire the number of data scientists required to clean and manage all this siloed data, much less analyze it for business insights.

These challenges are too hard for many organizations to overcome: a mere 36% of companies are able to deploy an ML model beyond the pilot stage, according to further McKinsey research.

Scaling AI with Databricks and Neudesic

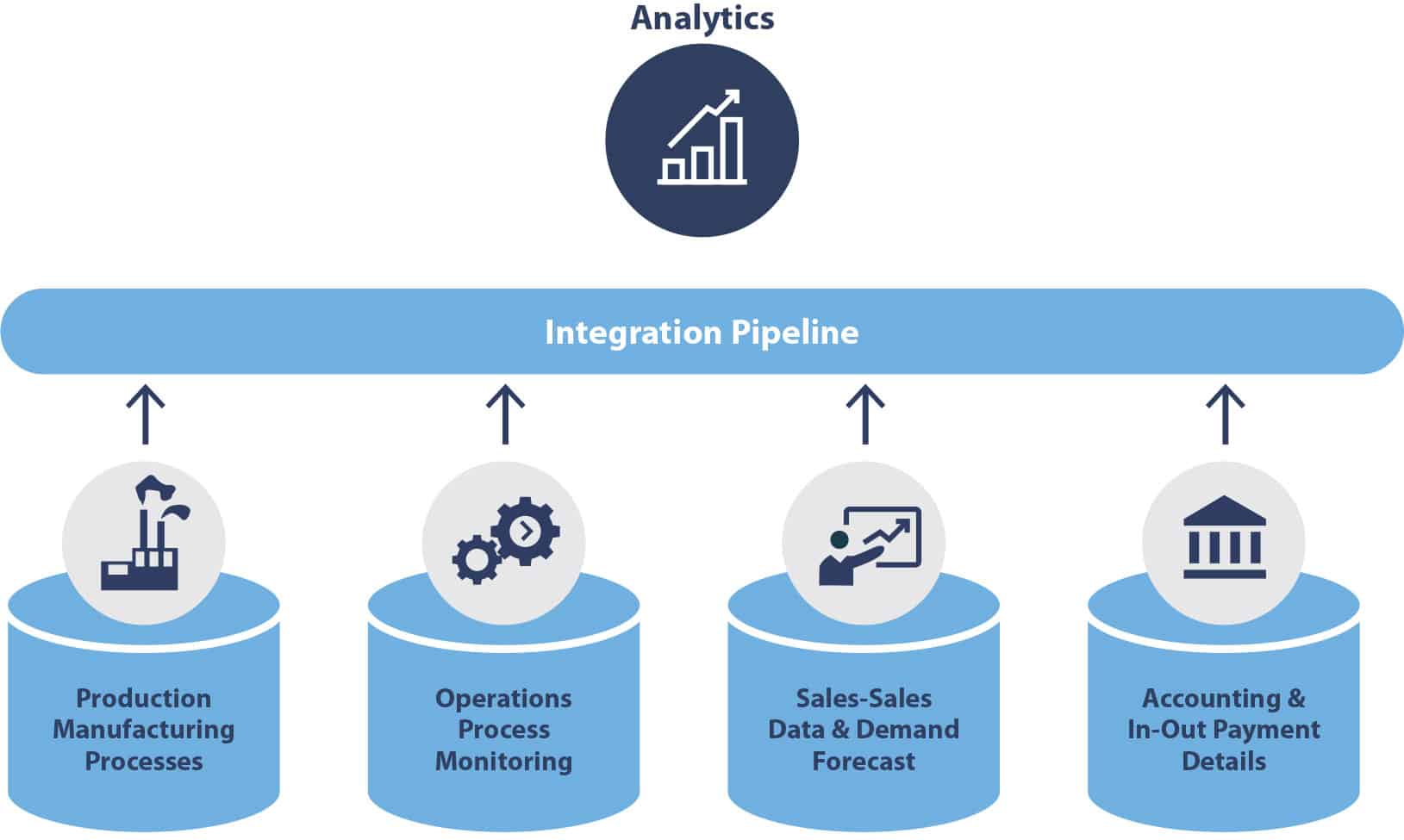

Scaling AI is attainable with the right partner and solutions. To help our clients shed their legacy systems and realize real-time data transformations, Neudesic offers the Data & AI Platform Accelerator, which automates the deployment of a single enterprise-wide cloud platform for big data management, with fully integrated analytics and reporting solutions.

Our solution is built on Databricks Lakehouse, given its unmatched ability to enable our clients to store, clean, and process vast amounts of data.

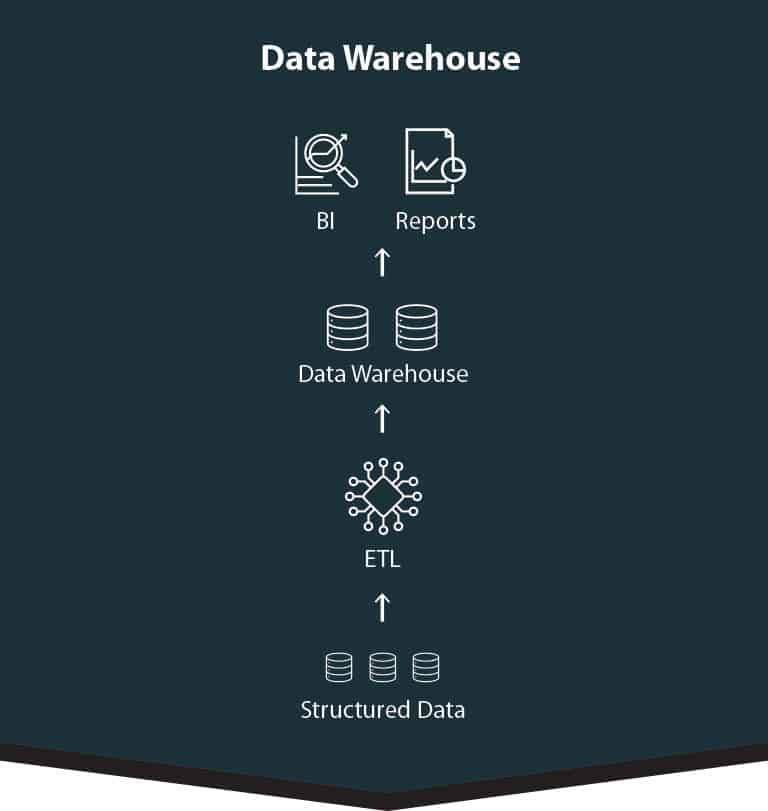

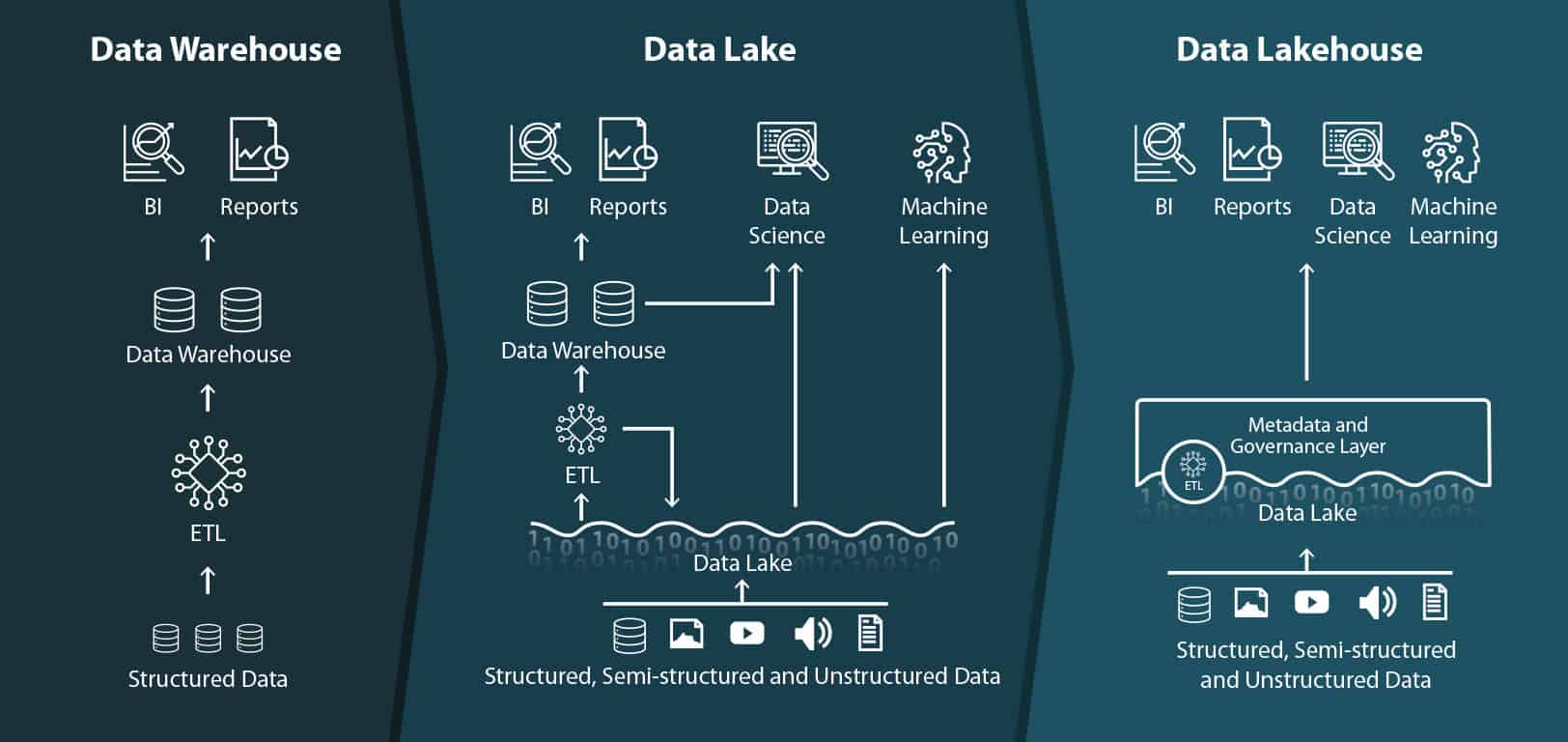

Databricks Lakehouse radically simplifies enterprise data infrastructure, reducing the need to maintain separate data warehouse and data lake infrastructure, and it enables stakeholders to access data more quickly and put it to good use. The solution also provides these important features:

- Support for ACID transactions, which helps to ensure consistency as multiple users concurrently read or write data.

- Schema enforcement and governance, enabling the platform to reason about data integrity and provide robust governance and auditing mechanisms.

- BI support, which enables the use of BI tools directly on the source data to reduce staleness and latency, improve recency, and eliminate the cost of operationalizing two copies of data in a data lake and a data warehouse.

- Storage decoupled from compute, so the systems can scale to many more concurrent users and larger data sizes.

- Open, standardized storage formats and an API, so a variety of tools and engines, including machine learning tools, can efficiently access the data directly.

- Support for diverse data types, from unstructured to structured, including the images, video, audio, semi-structured data, and text that new data applications need.

- Support for diverse workloads, including data science, machine learning, SQL, and analytics.

- End-to-end streaming, which eliminates the need for separate systems dedicated to serving real-time data applications.
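
To make the schema enforcement and consistent-write items in the list above a little more concrete, here is a minimal sketch in plain Python. It is a toy illustration of the idea only, not the Databricks or Delta Lake API: the `Table` class, `SchemaMismatchError`, and the reservation columns are all hypothetical names invented for this example. The table checks every record against a declared schema and rejects the whole batch if any record fails, so a bad write leaves existing data untouched.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


class SchemaMismatchError(ValueError):
    """Raised when a record does not match the table's declared schema."""


@dataclass
class Table:
    """Hypothetical in-memory table that enforces a schema on every write."""

    schema: Dict[str, type]  # column name -> expected Python type
    rows: List[Dict[str, Any]] = field(default_factory=list)

    def _validate(self, record: Dict[str, Any]) -> None:
        # Reject records with missing or unexpected columns
        if set(record) != set(self.schema):
            raise SchemaMismatchError(
                f"expected columns {sorted(self.schema)}, got {sorted(record)}"
            )
        # Reject records whose values have the wrong type
        for column, expected in self.schema.items():
            if not isinstance(record[column], expected):
                raise SchemaMismatchError(
                    f"column {column!r} expects {expected.__name__}, "
                    f"got {type(record[column]).__name__}"
                )

    def append(self, batch: List[Dict[str, Any]]) -> None:
        # Validate the whole batch before writing anything, so one bad
        # record causes the entire write to be rejected (all or nothing)
        for record in batch:
            self._validate(record)
        self.rows.extend(batch)


if __name__ == "__main__":
    # Made-up example data; the columns are assumptions for illustration
    reservations = Table(
        schema={"reservation_id": int, "branch": str, "daily_rate": float}
    )
    reservations.append(
        [{"reservation_id": 1, "branch": "Irvine", "daily_rate": 49.0}]
    )
    try:
        reservations.append(
            [
                {"reservation_id": 2, "branch": "Austin", "daily_rate": 55.0},
                {"reservation_id": "3", "branch": "Tampa", "daily_rate": 61.5},
            ]
        )
    except SchemaMismatchError as err:
        print(f"write rejected: {err}")
    print(f"rows stored: {len(reservations.rows)}")
```

In this sketch the second batch is rejected as a unit because one record carries a string where an integer is expected, so the valid record in that batch is not written either. A production lakehouse applies the same principle at much larger scale and with concurrent readers and writers, which this toy version does not attempt to model.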

Ultimately, we chose Databricks to be foundational to the Data & AI Platform Accelerator because we want to empower our clients to leverage their data to do good for their business.

Case in point: When a global rental company wanted to consolidate data that was siloed over five data platforms and make it available for enterprise-wide integration, the organization chose Neudesic to lead the transformation. For the client, the choice was simple because with the Data & AI Platform Accelerator, everything from data ingestion through to the consumption layer is built on Databricks.

Now, the organization will gain near real-time automated data feeds, and stakeholders across the organization will have visibility into operational metrics. Additionally, the new platform will reduce refresh times on the customer-facing reservation board from more than two hours to three minutes. That’s what Databricks can enable, and it’s why Neudesic makes it integral to our Data & AI Platform Accelerator.

Interested in learning more about how Neudesic leverages Databricks? Register for our webinar in December here!

Resources

Learn more about Data & AI here.

Neudesic AI use case samples:

Leading National Auto Insurance Company

Driving processing efficiency & reducing operational costs by 90% with Neudesic’s Document Intelligence Platform & DPi30.

Leading Multinational Reinsurance Corporation

Driving $3.3M cost reduction & 99% increase in processing efficiency with Neudesic’s Document Intelligence Platform.